Democracy After the Algorithm

How to win the next media war

- By Matt Lackey

One of the most powerful tools we have to fight injustice is technology, when it is used to shed light, spread information, and bring our communities together. In Minneapolis and across the country, we’ve seen people from all walks of life witness wrongdoing perpetrated by our own government, pull out their phones, hit record, and watch as the images spread across the country and around the world. The same devices and algorithms that so often spread fear and division are serving as a check on the power corroding our democracy.

As a strategist sitting at the intersection of politics and technology for two decades, what I see is a blueprint for how to win the next media war.

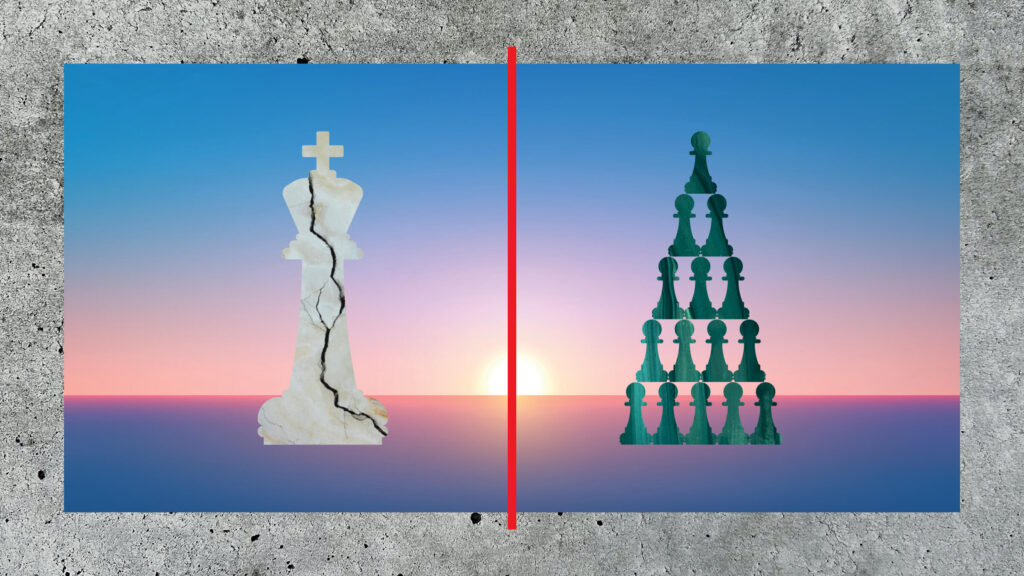

This next war is one between citizens using technology to bring people together, and those using it to tear us apart. And the battlefield is the combination of AI, algorithms, and social media.

The fundamental question we face is whether democracy can survive in a world dominated by technology. Very soon, that technology won’t just amplify human voices or spread footage of real events across the country; it will operate on its own, able to replicate, multiply, and spread across the internet with next to no friction or oversight. If the forces of fascism can make one person afraid, they can broadcast that fear and make everyone afraid.

We’re learning day after day that the same tools that can strengthen our communities can also supercharge those who want to undermine them. They can be used to divide, distract, and destabilize, or to connect, inform, and defend.

Right now, those who would weaken our democracy are moving fast, adapting to this new environment with ruthless clarity. Meanwhile, too many people fighting to preserve democracy are stuck arguing over old tactics and outdated strategies, approaches that already feel like the past. What worked in 2020 didn’t work in 2022. What worked in 2022 failed in 2024. What worked in 2024 will be obsolete by 2026.

The task ahead is simple but urgent: Stop optimizing for the past. Build for the future. The survival of our democracy depends on it.

How we got here

Phase 1: For most of the 20th century, media operated under conditions of scarcity. There were only a handful of TV channels, radio stations, and newspapers, all competing for the same broad audience. To survive, media had to offend as few people as possible and appeal to as many as possible. This produced a shared public conversation: Walter Cronkite, Johnny Carson, Cheers.

Phase 2: Beginning in the 1990s, the internet changed that model. Suddenly, media wasn’t just something you consumed as it was beamed to your TV or radio; it became something you could search, store, and return to at any time. Scarcity gave way to abundance. People no longer had to accept whatever was put in front of them; they could seek out content that matched their specific interests, identities, and values. Fandoms, forums, and online communities—the mundane and the strange—flourished.

Phase 3: In the 2010s, the algorithms took over. Instead of choosing what to watch or read, people increasingly let Facebook, Instagram, TikTok, and YouTube choose for them. Social feeds stopped being organized chronologically and started being optimized for engagement. The goal was no longer to inform or even entertain, but to keep users scrolling for as long as possible. The system learned what captured attention and delivered an endless, personalized stream designed to hold it. You watched more and for longer, but you enjoyed it less.

In that environment, we learned some uncomfortable truths about ourselves. When optimized for engagement, people gravitate toward tribalism. We are driven by anger, fear, and outrage. We seek validation. We get off on being emotional, angry, and conspiratorial. We revel in an endless flow of content that says, This is who your people are, and this is who they’re not.

But even that world of algorithmic manipulation already feels quaint.

The new battlefield

Generative media has changed how information is made and consumed. Platforms no longer need to search for content that might keep people engaged. They can generate it themselves instantly and at scale. What people see online is increasingly shaped by systems designed to hold attention, not by human judgment or editorial choice.

For the first time, machines aren’t just spreading messages; they’re creating them. They test what works in real time, learning which fears, grievances, and emotions keep people engaged, then adjust the content accordingly. Different people are shown different versions of reality, based on what the system predicts will provoke the strongest reaction. This isn’t theoretical. It’s already happening.

What’s at stake

The consequences are real and immediate.

Humans can’t compete with machines on speed or scale. “Authenticity” is no longer a reliable defense when technology can convincingly imitate human voices, emotions, and communities. Even the smartest, most thoughtful media consumer among us won’t be able to tell whether the person they’re watching is real or AI.

Older forms of manipulation are becoming obsolete. Troll farms and fake accounts were slow and expensive. Today, a single system can generate thousands of realistic messages, comments, and personas in seconds, flooding digital spaces with content that looks organic but isn’t.

The civic impact is already visible. By 2024, foreign actors were using advanced tools to target Americans directly. Traditional political tactics struggled to break through. Paid ads had limited effect. Social media became saturated with noise. The real influence came from algorithms that didn’t invent our divisions but learned how to amplify them—feeding anger, fear, and resentment back to users at scale.

The personal effects are just as serious. Loneliness is widespread, and digital substitutes are becoming more convincing. AI companions and algorithm-driven communities offer comfort without real connection, pulling people away from shared civic life and into personalized digital worlds.

If these systems continue unchecked, the long-term risk is a slow erosion of social cohesion. When people stop sharing facts, trust, and a sense of common purpose, cooperation becomes harder. Societies don’t fail all at once. They drift—quietly and steadily—away from the ability to solve problems together.

Out with community, in with the jackboots.

The wrong game vs. the right game

Most political and civic actors are still playing the wrong game. They are chasing raw attention—clicks, likes, shares—because those are the metrics platforms reward. But this is an unwinnable fight. Machines will always outperform humans on engagement metrics designed for them to optimize.

The right game is harder, but more important. It isn’t about maximizing attention; it’s about earning trust. That means creating content and communities that help people understand the world, connect with one another, and participate in their shared lives.

This doesn’t require abandoning technology or scale. The choice isn’t between integrity and reach. The real challenge is to build systems that move as fast and adapt as quickly as today’s engagement-driven platforms, but with different goals, systems that optimize for understanding and connection rather than reaction and division.

Think of this as infrastructure. Roads can bring people together or tear communities apart, depending on how they’re designed. Attention works the same way. If it’s built to push people toward the strongest emotion, it degrades everything around it. If it’s built to support learning, relationships, and shared purpose, it can help hold a society together.

That is the work ahead: building digital systems that make people more informed, more connected, and more capable of acting together. At scale. In real time. And in service of a healthier democracy.

How to win the future

The path forward is clear. As a society, we need to build a healthier media environment, one that pushes people outward and into a democratic world rather than into a backslide toward fascism that is experienced mostly in solitude and isolation.

We already know what builds healthier lives: staying active, eating better food, and doing both with other people. And we know what builds a healthier democracy: doing anything with other people. It starts with scaled communications that nudge people toward real life: neighbors, books, houses of worship, gyms, volunteering, and local institutions that still bind communities together.

A well-designed media system would move away from individual optimization and toward getting people into shared routines, like group workouts, walking clubs, and cooking classes. AI can help coordinate that by making people aware of these opportunities no matter what platform they’re on, nudging them to show up together, and reinforcing participation through shared accountability. The goal is not perfect self-improvement, but repeated, collective action that turns activity into community. Technology alone can’t do this, but it can help organize real people into real groups, which is how isolation is reversed and social fabric is rebuilt.

This is a design problem. We can build digital systems that deliver relevance and connection while reinforcing basic social norms. Systems that prioritize contribution, context, and follow-through. The same tools that power today’s engagement platforms—personalization, iteration, rapid feedback—can be used to support outcomes that leave people more informed, more connected, and better able to act together. Our attention systems should pull people toward participation, not just consumption.

That is why this mission will require collaboration among technologists, civic leaders, media organizations, and whole communities. We have to shape systems that reinforce shared reality, social trust, and connection by saying, “If you like something, invite people to hang out and like it with you.” The same technologies that fragment attention can also be used to support understanding and cooperation, if we choose to build for those outcomes. Done right, these systems can compete with the worst uses of generative media, foreign and domestic. Instead of saturating feeds with rage or nihilism, they can guide people toward real communities, real learning, and real civic life. Technology doesn’t have to weaken people. It can be built to strengthen them.

We will get this wrong at times. We will make mistakes. We will experiment and adjust. We will fail, and we will learn. But playing the right game imperfectly beats playing the wrong game perfectly. The work is unavoidable. If we do not take responsibility for the media environments we live in, they will be shaped by incentives that reward division, alienation, and extremism.

The alternative is clear: a laissez-faire future where robot-accelerated nihilism fills our feeds faster than any democratic institution can respond.

This is how democracy defeats dystopia. A combination of human intelligence and AI using technology not to divide, but to bring people together. We reknit the reality that used to hold us together. We leave our bubbles. We actually go outside.

We fight the next war. And we win.

About The Author

Matt Lackey is the founder of Tavern Research, a technology company focused on democracy. For two decades, he has been a leader and practitioner at the intersection of politics and technology.